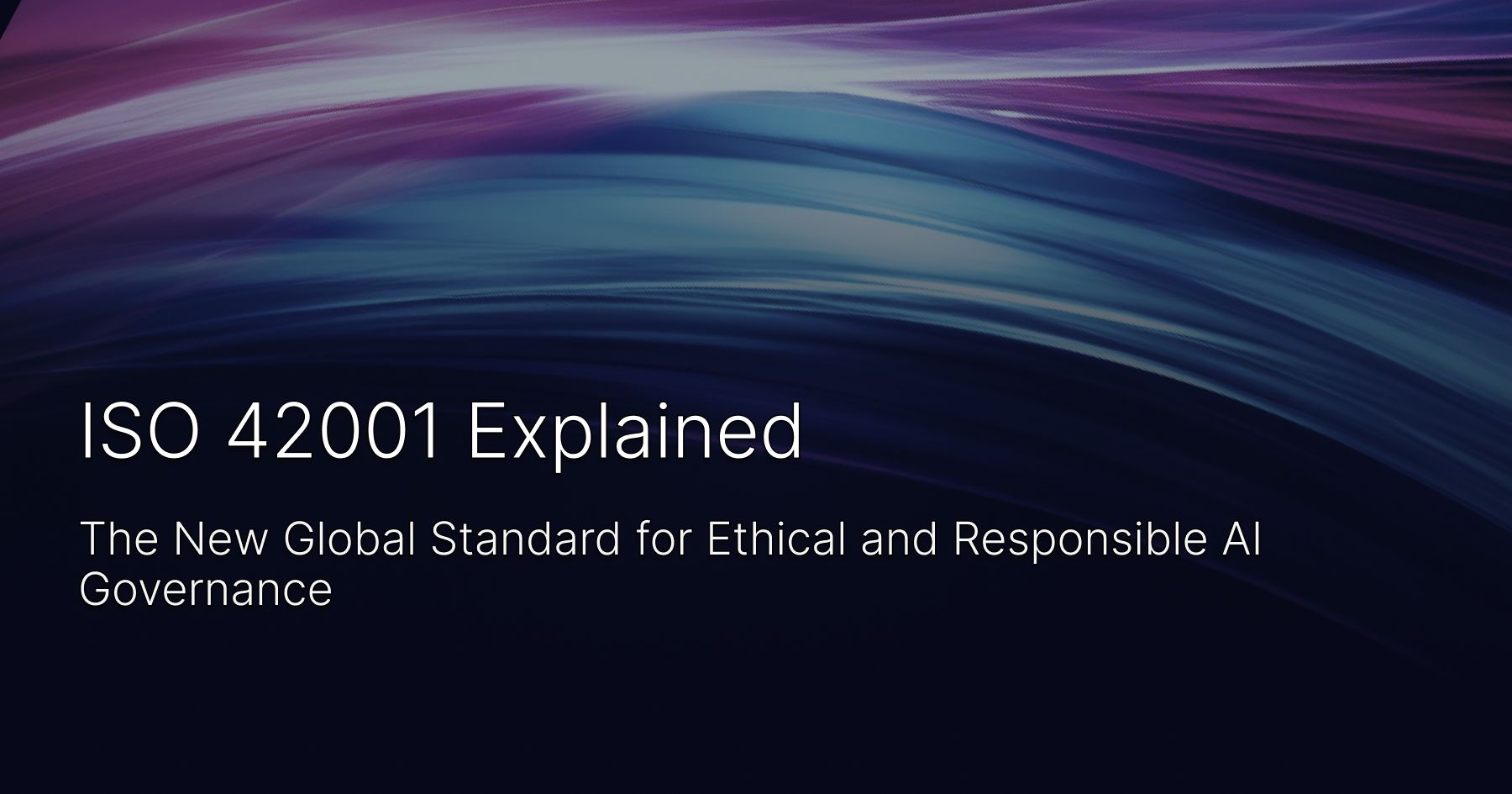

ISO 42001 Explained: The New Global Standard for Ethical and Responsible AI Governance

Artificial Intelligence (AI) is transforming every industry—but with great power comes great responsibility. As governments and consumers demand more transparency and ethical behavior from AI systems, organizations need a framework to manage these technologies safely and responsibly.

Enter ISO/IEC 42001:2023, the first international standard for Artificial Intelligence Management Systems (AIMS).

What Is ISO 42001?

ISO/IEC 42001 is a globally recognized standard developed by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC). It offers a structured approach for organizations to develop, deploy, and govern AI systems in a trustworthy, ethical, and legally compliant way.

Whether you're creating AI models or using third-party AI tools, ISO 42001 gives you a framework for bringing your AI practices in line with internationally recognized expectations for responsibility and risk management.

Why ISO 42001 Matters

✅ Mitigate AI Risks

One of the most critical challenges organizations face with artificial intelligence is managing the unique and evolving risks that come with it. Unlike traditional IT systems, AI introduces dynamic, probabilistic behavior that can be hard to predict or control.

ISO/IEC 42001 helps organizations identify, assess, and mitigate these AI-specific risks systematically, ensuring responsible and reliable outcomes across the AI lifecycle.

Key Risk Areas Addressed by ISO 42001:

- Algorithmic Bias & Discrimination: AI systems can inadvertently reflect or amplify social biases present in training data. ISO 42001 guides organizations in detecting and minimizing bias through inclusive data practices, testing, and fairness audits.

- Opaque Decision-Making (Black Box AI): Many AI models, particularly deep learning systems, lack transparency. The standard emphasizes explainability and traceability, enabling better accountability and user trust.

- Data Quality & Integrity Risks: Poor data can lead to inaccurate predictions or flawed insights. ISO 42001 outlines controls for ensuring that data used in training and inference is accurate, relevant, and up-to-date.

- Security & Adversarial Threats: AI systems can be vulnerable to adversarial attacks (e.g., manipulated inputs). The standard encourages robust security practices that address threats specific to machine learning systems.

- Unintended Consequences: AI can behave unpredictably in real-world scenarios, especially in dynamic environments. ISO 42001 promotes simulation, monitoring, and fallback strategies to prevent harm or disruption.

- Over-Reliance on Automation: When users place too much trust in AI outputs, critical thinking can be bypassed. The standard stresses the importance of human-in-the-loop oversight and clear escalation paths.
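To make the idea of a fairness audit from the risk list above more concrete, here is a minimal Python sketch. It compares the rate of favourable outcomes across groups and flags a large gap for human review. The sample data, the function names, and the 0.8 threshold (a common rule of thumb, not a value taken from ISO 42001) are all assumptions for illustration.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the share of favourable outcomes per group.

    `records` is an iterable of (group, approved) pairs, where `approved`
    is True when the model produced the favourable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favourable outcome, so no gap
    return min(rates.values()) / highest

# Hypothetical audit sample: group label and model decision.
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

REVIEW_THRESHOLD = 0.8  # assumed internal policy value

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD
print(rates, round(ratio, 2), needs_review)
```

A single ratio like this is only a starting signal. A real audit would look at several metrics, sample sizes, and the context in which the decision is made.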

✅ Improve Transparency & Accountability

One of the most pressing concerns in AI adoption is the lack of visibility into how decisions are made. Black-box models, unclear data sources, and ambiguous roles can lead to serious consequences—from compliance violations to reputational damage.

ISO/IEC 42001 addresses this by requiring organizations to build strong governance structures that enforce transparency, traceability, and accountability at every stage of the AI lifecycle.

Key Components of Transparency and Accountability in ISO 42001:

- Explainability: AI systems must be designed to produce outcomes that can be understood by users, regulators, and stakeholders. ISO 42001 encourages the use of interpretable models where possible and requires that organizations document and communicate how decisions are made, even for complex or opaque systems.

- Auditability & Traceability: The standard emphasizes maintaining logs and records of AI system behavior, data lineage, model versions, and decision flows. This allows organizations to trace back decisions, identify root causes of failures, and demonstrate compliance during audits.

- Human Oversight & Control: Rather than relying solely on autonomous systems, ISO 42001 promotes “human-in-the-loop” or “human-on-the-loop” governance. It ensures that there is always a designated individual or role responsible for monitoring AI behavior and intervening when necessary.

- Defined Roles & Responsibilities: Transparency also depends on accountability. ISO 42001 requires organizations to define clear roles (such as AI ethics officers, model risk managers, or compliance leads) so that accountability for AI decisions is not ambiguous or decentralized.

- Stakeholder Communication: The standard encourages organizations to communicate how their AI systems work and what safeguards are in place. This fosters public trust, especially in sensitive domains like finance, healthcare, and public services.

Example in Action:

Consider a hospital using AI to recommend cancer treatments. With ISO 42001 in place, clinicians and patients would have access to:

- A documented explanation of how the AI reached its recommendation

- A human doctor responsible for final decision-making

- A logged record of the algorithm's parameters, data inputs, and version history

This not only helps ensure medical accountability but also builds confidence and trust in AI-assisted care.
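As a rough illustration of the "logged record" in the hospital example, the Python sketch below builds one audit entry per AI recommendation. The field names, the `DecisionRecord` class, and the sample values are hypothetical and are not prescribed by ISO 42001. The inputs are stored as a hash so that raw patient data stays out of the log.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone

@dataclass(frozen=True)
class DecisionRecord:
    model_name: str
    model_version: str
    parameters: dict
    input_digest: str   # SHA-256 of the inputs, not the inputs themselves
    recommendation: str
    explanation: str    # documented reasoning shown to clinicians
    reviewed_by: str    # human accountable for the final decision
    timestamp: str      # UTC, ISO 8601

def digest(payload: dict) -> str:
    """Stable SHA-256 digest of a JSON-serializable payload."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def make_record(model_name, model_version, parameters, inputs,
                recommendation, explanation, reviewed_by) -> DecisionRecord:
    return DecisionRecord(
        model_name=model_name,
        model_version=model_version,
        parameters=parameters,
        input_digest=digest(inputs),
        recommendation=recommendation,
        explanation=explanation,
        reviewed_by=reviewed_by,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )

# Hypothetical example values.
record = make_record(
    model_name="treatment-recommender",
    model_version="1.4.2",
    parameters={"top_k": 3},
    inputs={"patient_ref": "anon-001", "tumor_stage": "II"},
    recommendation="regimen-A",
    explanation="Top contributing features: stage, biomarker status.",
    reviewed_by="dr.example",
)

# One JSON line per decision is easy to append to a write-once log.
log_line = json.dumps(asdict(record), sort_keys=True)
print(log_line)
```

Recording the model version and reviewer alongside each decision is what lets an auditor later trace a specific recommendation back to the exact system state and the person who signed it off.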

By embracing transparency and accountability through ISO 42001, organizations can de-risk AI deployment, meet regulatory demands, and build systems that people actually trust and understand.

✅ Ensure Regulatory Compliance

As artificial intelligence becomes increasingly integrated into products, services, and decision-making processes, regulatory scrutiny is ramping up worldwide. From the EU AI Act to sector-specific guidelines in healthcare, finance, and public safety, organizations are under pressure to prove that their AI systems operate in a lawful, ethical, and transparent manner.

ISO/IEC 42001 provides a structured, proactive approach to compliance with global and regional AI laws, enabling organizations to stay ahead of current and future regulatory demands.

How ISO 42001 Supports Compliance:

- Alignment with the EU AI Act: The EU AI Act classifies AI systems by risk level and mandates rigorous documentation, transparency, and oversight, especially for “high-risk” AI applications. ISO 42001 helps meet these obligations through its built-in risk management, impact assessment, and human oversight mechanisms.

- Support for Data Protection Laws (e.g., GDPR, CCPA): AI systems often process personal data, making compliance with data protection laws critical. ISO 42001 reinforces principles like data minimization, transparency, and lawful data usage, which align closely with GDPR and similar frameworks.

- Audit-Ready Documentation: Regulators increasingly require evidence that organizations understand and control their AI systems. ISO 42001 mandates thorough documentation of AI design, training, performance metrics, and risk controls, making it easier to demonstrate due diligence during regulatory reviews.

- Ethical Risk Mitigation: Many new regulations go beyond technical compliance to emphasize ethical concerns like discrimination, fairness, and accountability. ISO 42001 addresses these topics explicitly, offering organizations a way to operationalize AI ethics into day-to-day processes.

- Global Interoperability: As AI governance continues to evolve, ISO 42001 serves as a universal standard that bridges regulatory expectations across regions, making it ideal for multinational organizations seeking consistency in compliance practices.

Example in Action:

A fintech company developing an AI-based credit scoring tool must comply with the EU AI Act and GDPR. By adopting ISO 42001, the company can:

- Classify the system’s risk level

- Implement controls for data protection and bias prevention

- Maintain records for regulatory inspection

- Clearly define roles and responsibilities across teams

This supports legal compliance and also helps build a reputation for responsible innovation in a highly regulated industry.
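The four steps in the fintech example could be tracked in a simple AI system register. The Python sketch below is one possible shape, under stated assumptions: the tier names loosely mirror the EU AI Act's risk categories, while the control names, the required-controls mapping, and the `AISystemEntry` class are hypothetical internal policy, not text from ISO 42001 or the Act.

```python
from dataclasses import dataclass, field
from enum import Enum

class RiskTier(Enum):
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"

# Assumed internal policy: controls that must be in place for each tier.
REQUIRED_CONTROLS = {
    RiskTier.MINIMAL: set(),
    RiskTier.LIMITED: {"user_disclosure"},
    RiskTier.HIGH: {
        "bias_testing",
        "human_oversight",
        "decision_logging",
        "data_protection_assessment",
    },
}

@dataclass
class AISystemEntry:
    name: str
    tier: RiskTier
    owner: str  # role accountable for the system
    controls: set = field(default_factory=set)

    def missing_controls(self):
        """Controls required for this tier that are not yet implemented."""
        return sorted(REQUIRED_CONTROLS[self.tier] - self.controls)

# Hypothetical entry: the credit scoring tool, treated here as high-risk.
credit_scoring = AISystemEntry(
    name="credit-scoring-v2",
    tier=RiskTier.HIGH,
    owner="model-risk-manager",
    controls={"bias_testing", "decision_logging"},
)

gaps = credit_scoring.missing_controls()
print(gaps)
```

Keeping the required controls in one mapping means a change in policy or regulation is made in a single place, and every registered system can be re-checked against it.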

✅ Build Customer Trust

In today’s digital economy, trust is the currency of innovation—especially when it comes to artificial intelligence. Customers, clients, and users are increasingly aware of the potential risks associated with AI, from data misuse and surveillance to bias in automated decisions. They want to know that the technologies they rely on are safe, fair, and ethically developed.

ISO/IEC 42001 provides a globally recognized framework that helps organizations prove their commitment to responsible AI, reinforcing trust with users, partners, and the public.

Why Trust Matters in AI:

- AI is invisible but impactful — People often don’t see how AI affects their lives, but they feel the consequences, whether it's a declined loan application or a content moderation decision.

- High-profile failures erode confidence — Data breaches, biased algorithms, and opaque decisions have made customers wary of unchecked AI deployment.

- Trust influences adoption — Users are more likely to engage with AI-driven services if they believe the organization behind them has their best interests in mind.

How ISO 42001 Helps Build Trust:

- Third-Party Validation: Certification under ISO 42001 demonstrates that your organization has implemented strong AI governance practices verified by an independent authority. This sends a powerful message: we don’t just talk about ethical AI; we prove it.

- Transparent AI Practices: By requiring clear documentation, explainability mechanisms, and user communication protocols, ISO 42001 helps ensure that customers know how and why AI systems make decisions that affect them.

- Human Oversight & Appeal Channels: Users feel safer knowing there’s a human they can contact or appeal to if an AI system makes a questionable decision. ISO 42001 embeds human accountability into the design of AI workflows.

- Ethical Risk Controls: The standard addresses fairness, inclusiveness, and non-discrimination, giving customers confidence that your AI isn’t reinforcing bias or exploiting sensitive data.

- Consistency Across Channels: Whether customers interact with your chatbot, app, or decision-support tools, ISO 42001 ensures the same level of ethical rigor and reliability is applied throughout the AI ecosystem.

Example in Action:

A consumer-facing insurance company uses AI to assess claims. With ISO 42001 certification, the company can:

- Publicly communicate its responsible AI policies

- Ensure customers understand how AI is involved in their claim processing

- Provide assurance that decisions are monitored and appealable

- Build loyalty by showing that it values fairness, privacy, and transparency
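One way to make claim decisions "monitored and appealable" is to route certain cases to a human reviewer instead of finalizing them automatically. The Python sketch below shows the idea. The routing rules, the confidence floor, and the `ClaimDecision` fields are assumptions chosen for illustration, not requirements quoted from ISO 42001.

```python
from dataclasses import dataclass

CONFIDENCE_FLOOR = 0.85  # assumed policy value

@dataclass
class ClaimDecision:
    claim_id: str
    outcome: str        # "approve" or "deny"
    confidence: float   # model's confidence in the outcome, 0.0 to 1.0
    appealed: bool = False

def route(decision: ClaimDecision) -> str:
    """Return "human_review" or "auto_finalize" for a model decision."""
    if decision.appealed:
        return "human_review"   # every appeal reaches a person
    if decision.outcome == "deny":
        return "human_review"   # adverse outcomes are never fully automated
    if decision.confidence < CONFIDENCE_FLOOR:
        return "human_review"   # the model is unsure, so a person decides
    return "auto_finalize"

# Hypothetical claims.
decisions = [
    ClaimDecision("C-1", "approve", 0.97),
    ClaimDecision("C-2", "approve", 0.60),
    ClaimDecision("C-3", "deny", 0.99),
    ClaimDecision("C-4", "approve", 0.97, appealed=True),
]

routes = {d.claim_id: route(d) for d in decisions}
print(routes)
```

Sending every denial and every appeal to a person is a deliberately conservative choice. An organization could relax or tighten these rules, as long as the policy is documented and someone is accountable for it.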

By aligning with ISO 42001, you’re not just managing AI responsibly—you’re creating a brand that customers trust to use AI ethically, transparently, and in service of real human needs.

✅ Maintain Competitive Advantage

As AI becomes a core driver of innovation across industries, the organizations that manage it responsibly, transparently, and sustainably will stand out from the competition. In a landscape where trust, compliance, and ethics are becoming key differentiators, ISO/IEC 42001 positions your business as a forward-thinking leader in AI.

Why Responsible AI Is a Business Advantage:

- Trust drives brand loyalty — Customers, partners, and investors are more likely to engage with companies that demonstrate a commitment to ethical AI use.

- Regulatory readiness reduces operational risk — Companies that are prepared for future AI regulations avoid costly disruptions, fines, and reputational damage.

- Efficiency and standardization improve scalability — ISO 42001 introduces repeatable governance processes that reduce rework and streamline AI operations across teams and geographies.

- Certification creates market signal — An ISO 42001 certification acts as a competitive badge, distinguishing you in RFPs, vendor assessments, and public-sector partnerships.

Strategic Benefits of ISO 42001 Adoption:

- Accelerate Time-to-Market for AI Solutions: With standardized risk assessments, testing protocols, and approval workflows, organizations can develop and deploy AI faster without sacrificing safety or quality.

- Win High-Stakes Contracts: Government and enterprise clients are increasingly requiring AI governance assurances in procurement. ISO 42001 helps meet these requirements and opens doors to new business opportunities.

- Attract Top Talent: Engineers, data scientists, and AI researchers want to work for companies that build responsible technology. ISO 42001 signals that your company cares about the societal impact of AI.

- Future-Proof Your Innovation: By aligning with a globally accepted standard, you reduce the need for costly rework every time regulations shift or ethical concerns surface. ISO 42001 offers a sustainable foundation for AI innovation.

- Enhance Investor and Stakeholder Confidence: In a world where ESG (Environmental, Social, and Governance) criteria influence investment, ISO 42001 supports the "S" and "G" pillars by demonstrating ethical AI governance.

Who Needs ISO/IEC 42001?

- AI product developers looking to align with ethical and legal standards.

- Organizations using AI in business operations or decision-making.

- Heavily regulated sectors like healthcare, finance, and public services.

- Auditors and consultants supporting responsible AI transformation.

Key Features of ISO 42001

- AI-specific risk assessment frameworks

- Lifecycle management for data and models

- Human-centric governance principles

- Alignment with ISO/IEC 27001 and other ISO management standards

- Audit-ready structure and documentation

How to Implement ISO 42001

Getting started with ISO/IEC 42001 involves a few critical steps:

- Conduct an AI maturity assessment

- Define AI governance policies and objectives

- Integrate AI risk management processes

- Train stakeholders on roles and responsibilities

- Pursue ISO 42001 certification through an accredited body

The Future of Responsible AI Starts Here

As AI continues to evolve, so do expectations for transparency, accountability, and fairness. ISO 42001 is your pathway to operationalizing responsible AI and ensuring your organization is future-proof and regulation-ready.

🔎 Ready to take the next step?

Whether you’re just exploring AI governance or preparing for certification, we can help.

Contact us today to learn how your organization can become ISO 42001 certified and lead with confidence.
